Cache Coherence Protocol Simulator

| Parallel Execution | Cache Coherence | C++ |

Parallelizing instructions can often shorten a program's execution time. Each CPU is designed with its own share of cache so that memory accesses are fast. When multiple CPUs hold copies of the same data at the same time, a write by one CPU can leave the other copies stale, which is effectively a race condition at the hardware level. This problem is formally known as cache coherence.

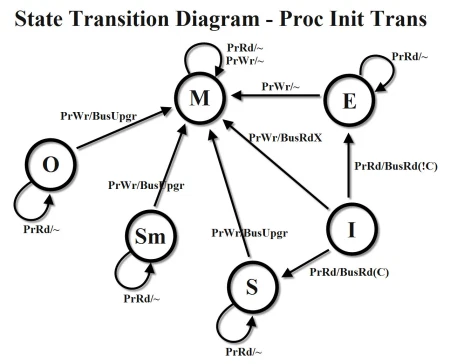

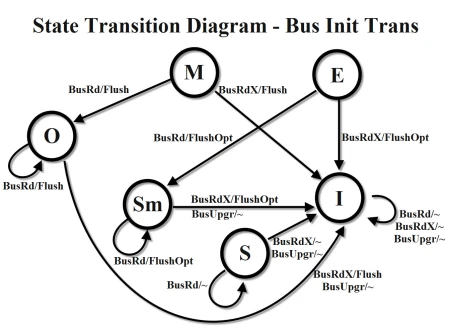

This simulator looks at snoop-based cache coherence protocols. The project implements MSI, MESI, MOESI, and MOESIF. In simple terms, based on their cache accesses, CPUs pass messages on a shared message bus to notify other CPUs that hold the same cache block to change the state of their own copy (e.g. stale or shared). Here is a good link on what the protocols do: Link
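To make the message passing concrete, here is a minimal sketch of the textbook MSI transitions for a single cache block. It is not taken from the simulator's source: the names `State`, `BusMsg`, `onCpuAccess`, `onSnoop`, and `access` are hypothetical, and write-backs and data transfer are left out so that only the state changes are visible.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical types for illustration; the simulator's own names may differ.
enum class State { Modified, Shared, Invalid };
enum class BusMsg { None, BusRd, BusRdX, BusUpgr };

// Update the requesting cache's state for a read or write by its own CPU
// and return the message it would broadcast on the shared bus.
BusMsg onCpuAccess(State& s, bool isWrite) {
    switch (s) {
    case State::Invalid:
        // Miss: fetch the block, exclusively if we intend to write.
        s = isWrite ? State::Modified : State::Shared;
        return isWrite ? BusMsg::BusRdX : BusMsg::BusRd;
    case State::Shared:
        if (!isWrite) return BusMsg::None;  // read hit, no bus traffic
        s = State::Modified;                // write hit needs ownership
        return BusMsg::BusUpgr;
    case State::Modified:
        return BusMsg::None;                // already the sole owner
    }
    return BusMsg::None;
}

// Update another cache's state when it snoops a message from the bus.
void onSnoop(State& s, BusMsg m) {
    if (m == BusMsg::None || s == State::Invalid) return;
    if (m == BusMsg::BusRd) {
        // A reader appeared: a Modified copy is downgraded to Shared
        // (a real cache would also write the block back here).
        if (s == State::Modified) s = State::Shared;
    } else {
        // BusRdX or BusUpgr: another CPU will write, so our copy is stale.
        s = State::Invalid;
    }
}

// One access to the block by CPU `cpu`; states[i] is CPU i's state for it.
BusMsg access(std::vector<State>& states, std::size_t cpu, bool isWrite) {
    assert(cpu < states.size());
    BusMsg m = onCpuAccess(states[cpu], isWrite);
    for (std::size_t i = 0; i < states.size(); ++i) {
        if (i != cpu) onSnoop(states[i], m);
    }
    return m;
}
```

With two CPUs that both start in `Invalid`, a read by each leaves both copies `Shared`. A following write by CPU 1 broadcasts `BusUpgr`, which moves CPU 1 to `Modified` and invalidates CPU 0's copy. MESI, MOESI, and MOESIF add further states on top of this scheme, and this sketch does not cover them.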